The overwhelming ‘Whiteness’ of artificial intelligence – from stock imagery to film robots and the dialects of virtual assistants – is removing people of colour from humanity’s visions of its high-tech future.

In their paper “The Whiteness of AI”, published today in the journal Philosophy and Technology, Leverhulme CFI Executive Director Stephen Cave and Dr Kanta Dihal offer insights into the ways in which portrayals of AI stem from, and perpetuate, racial inequalities.

Cave and Dihal cite research showing that people perceive race in AI, not only in human-like robots but also in abstracted and disembodied AI. These perceptions can also affect behaviour, as demonstrated by work showing that ‘Black’ robots receive more online abuse than their ‘White’ counterparts.

The authors also suggest several ways in which a consistently White portrayal of AI can amplify discrimination: by sustaining a racially homogeneous workforce, perpetuating oppressive narratives of White superiority, misrepresenting the opportunities and risks of AI, and creating new hierarchies that place ‘White’ machines in a position of power over non-White humans.

AI as both white and White

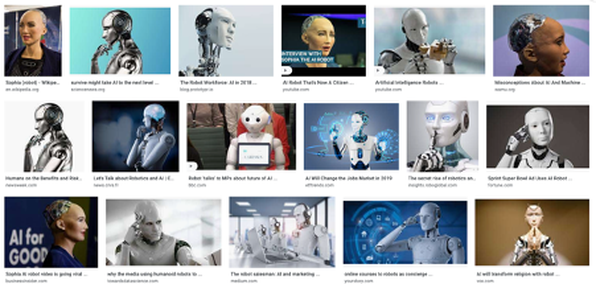

Dr Dihal, leader of the Centre’s Global AI Narratives and Decolonising AI projects, explains that it is unsurprising that a society which has promoted the association of intelligence with White Europeans for centuries would also imagine machine intelligence as White. Consider Sophia, a humanoid robot created in Hong Kong, granted citizenship by Saudi Arabia and named an “innovation champion” by the UN Development Programme: she is distinctly Caucasian.

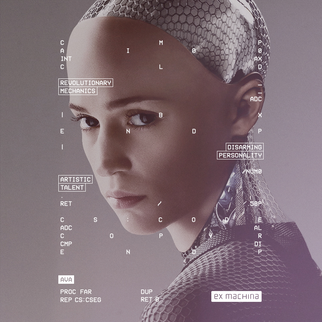

Ex Machina Social by Watson Design Group. Licensed under CC BY-NC 4.0

Non-White racialisations are not simply absent from visions of the future, however. Crude non-White racial stereotypes are applied to extraterrestrials, such as the Flash Gordon villain Ming the Merciless or the Caribbean caricature of Jar Jar Binks. AI, by contrast, is portrayed as White because it is associated with the same attributes that were used to justify colonialism in the past: superior intelligence, professionalism and power.

The Terminator by V. Sarela. Licensed under CC BY-NC 4.0

“From Terminator to Blade Runner, Metropolis to Ex Machina, all are played by White actors or are visibly White onscreen. Androids of metal or plastic are given white features, such as in I, Robot. Even disembodied AI – from HAL 9000 to Samantha in Her – have White voices. Only very recently have a few TV shows, such as Westworld, used AI characters with a mix of skin tones,” explains Dihal.

In their paper, Cave and Dihal highlight that even works based on slave rebellion, such as Blade Runner, depict their AIs as White. As Dihal puts it, “AI is often depicted as outsmarting and surpassing humanity. White culture can’t imagine being taken over by superior beings resembling races it has historically framed as inferior.”

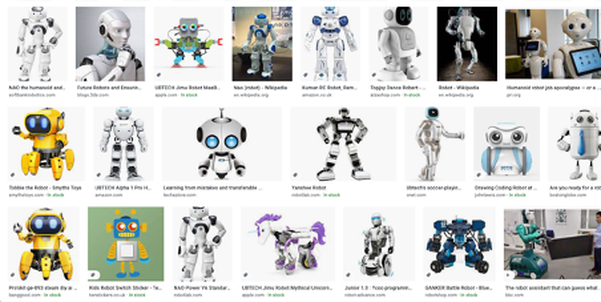

As part of their research, Cave and Dihal found that the non-abstract images returned by search engines for ‘AI’ either had Caucasian features or were literally the colour white.

Combined image search results for “Robot” and “AI Robot” in Google as of 5 August 2020.

Danger, Will Robinson: The Risks of Homogeneity

Beyond reflecting and perpetuating existing inequalities, Cave and Dihal warn of further dangers in allowing this pattern of racial homogeneity to persist. Dr Dihal explains:

“People trust AI to make decisions. Cultural depictions foster the idea that AI is less fallible than humans. In cases where these systems are racialised as White, that could have dangerous consequences for humans that are not.”

“Portrayals of AI as White situate machines in a power hierarchy above currently marginalised groups, and relegate people of colour to positions below those of machines. As machines become increasingly central to automated decision-making in areas such as employment and criminal justice, this could be highly consequential.”

Breaking the pattern

Arguably, a first step in breaking the pattern of racially biased AI portrayals is to make it visible. The authors explain that the normalisation of Whiteness in our society renders that Whiteness invisible. As the paper states, “The majority of White viewers are unlikely to see human-like machines as racialised at all, but simply as conforming to their idea of what human-like means. For non-White people, on the other hand, Whiteness is never invisible in this manner.”

By exposing the representational choices and power hierarchies built into current visions of AI, Cave and Dihal hope to contribute to the reconstruction and decolonisation of what it means to be human, an objective central to efforts to decolonise AI.